This post continues the Spotlight on the Suicides series.

There were 8 named people in Stephen O’Neill’s medical record, or who made entries in the record between the time of first admission and his death. He appears to have told most of them that in his view the sertraline he had been put on and took two doses of had had a disastrous effect on him. There were likely up to 20 others or more whom he had contact with and probably said much the same thing to.

No-one heeded him. There was an obvious course of action had they listened – stop all meds, agree with his diagnosis and tell him this would settle. It would have settled, perhaps facilitated by diazepam or the lorazepam he was briefly given, but even without this it would have settled.

The pharmacist who filled his original prescription was the first person he turned to when things began to go wrong – before going to hospital. She was the only person who appears to have listened.

At various points over the following 6 weeks Stephen told visiting secondary care staff and others in the day hospital or outpatient settings that he was thinking of suicide following changes in his drugs. This led to the addition of further drugs and finally Buspirone.

Buspirone is a failed predecessor of the SSRIs and is closely related to them. One of the surprises in Stephen’s case was that anyone thought to give it to him – it is rarely prescribed now. Anyone who had reacted with agitation and suicidality to an SSRI was on the face of it more likely to react in a similar way to buspirone than to the mirtazapine or quetiapine he was also tried on. A few days after being put on buspirone Stephen was dead.

When they came to review what had happened the secondary care services patted themselves on the back. Everything they had prescribed was, they said, recommended by NICE Guidelines. Everyone who had been supposed to liaise with anyone else had liaised with all the people they should have liaised with. All boxes had been ticked. The service had done a wonderful job. No one seems to have passed on the message they were given by Stephen.

Spotlight

In the 1980s, a concern about abusive clergy emerged in Ireland. It led to the fall of the Irish Government and triggered a crisis that engulfed the Catholic Church worldwide.

Decades later Hollywood, in the movie Spotlight, twisted the events out of shape – clerical abuse had been discovered by brave journalists in Boston who had gone where no-one else dared go. The truth was the United States was very late in facing up to what was happening, and has been behind the curve ever since. Ireland, which might have been thought to be the least likely to face up to the issue, had grappled with it long before Boston.

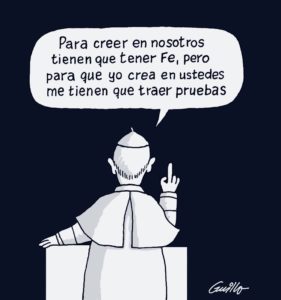

The crisis came to a head during a visit by Jorge Mario Bergoglio to Chile in 2018. Facing anger about the Church’s failure to confront child abuse, Bergoglio responded – if you have faith you believe in us [the clergy] but for us to believe in you [the abused] we need proof. The powerful are innocent until proven guilty. The powerless have anecdotes but not evidence.

If you have faith you believe in doctors but for us to believe in your claim a drug has wrecked you we need proof.

Unlike the participants in Stephen O’Neill’s inquest, and thousands of other inquests that have unfolded the same way, Bergoglio appears to some extent to have recognised how his response has added to the original damage.

Beyond the Law

In the case of the Church, the tacit understanding seems to be that the authorities figured their personnel, the clergy, were bound by canon law rather than civil law. Loosely, if the person responsible confessed their sins, God would forgive them and cure them. It was not the place of the authorities to punish them. Even when the trespassing continued, who gave a bishop, archbishop, cardinal or even pope the right to take the place of God and condemn the trespasser?

For physicians today, Guidelines operate the same way. They transcend common sense. If you have adhered to them, you can do no wrong, or no earthly authority is going to find you at fault – “there but for the grace of God go I” as was once commonly said.

Unlike his people beforehand and his church since, God finally figured Guidelines were not the right approach. But the right approach, as became clear, was likely to be painful. This perception that grappling with the issues might be painful underpins the appeal commandments and guidelines have for good and decent people – and why the greatest problem for Christianity has always been good and decent people.

At the inquest, to give them their due, the secondary services appear to have accepted that some of the medicines Stephen was put on could cause akathisia – agitation.

I can’t be certain how this was put because apparently I am not a person entitled to a transcript of the inquest. The coroner’s conclusion suggests that what he picked up is that there was an acceptance the drug might have caused a relatively minor problem – a dissociative problem – which fed into the longstanding mental difficulties Stephen had from bullying at school (which was minor and of no obvious consequence), through loss of a parent, onwards – the memories of which, triggered by agitation linked to the drug, caused him to commit suicide.

Now we know why healthy volunteers commit suicide on these drugs. Even healthy volunteers who didn’t know they’d ever been bullied and both of whose parents are still alive. There’s always going to be something a drug can activate – which is the true cause of the suicide.

Pontius Pilate

What’s a coroner to do when caught between warring factions?

One side saying we have kept to all the commandments. This man clearly had an enduring mental illness. And the folk on that side are all pillars of the Establishment.

On the other side, the main offering being the word of a man who is now dead.

Sticking with the Establishment also meant not having to get involved in someone else’s politics. How can he be expected to understand ghost-writing, zero access to trial data – it sounds unbelievable that physicians and their professional bodies would let something like this happen. Better let the Judaeans sort this out for themselves.

What would you do?

Next week Spotlight on the Politicians

In Spotlight, the ‘Hollywood’ version, the Boston Globe, the Chief, protagonist, had received the story about the Molesting clergy, twenty years before. It had been forwarded, but buried, he admitted, near the end..

In new investigations, the molested, were traumatised and very reluctant to speak to anybody, let alone the BG reporters..

Even lawyers were behaving like Dark Horses..

Documents, under seal, had disappeared.

A good story like this couldn’t possibly happen…

Companies like GSK didn’t hire ghostwriters, they didn’t submit data to the FDA which was false, they didn’t hide clinical trials in which there were suicides.

There wasn’t a Study329, sitting, waiting, for the world to realise..

What chance did Stephen have?

Ignoring, seems par for the course..

The Boston Globe thought that 90 clergy, was breathtakingly high, it turned out to be thousands.

At the end of Spotlight, the sweated story, on the front page, of the Boston Globe, all the reporters were at their desks and the phones didn’t stop, and thousands were volunteering their stories …

Apropos Panorama…Emails from the Edge

https://study329.org/video/

What would you do?

Bin the guidelines, total junk, listen to innocent people like Stephen, who lost his life to ineptitude, and too many people keeping the – Faith ..

Fatal consequences …

Christopher Lane @christophlane

The scandal embroiling a paroxetine (Paxil) bipolar study will be taught in medical ethics classes. My latest for @PsychToday https://www.psychologytoday.com/blog/side-effects/201911/the-truth-about-medical-pr …

Christopher Lane Ph.D.

Side Effects

The Truth About Medical PR

A textbook case on medical ethics.

https://www.psychologytoday.com/gb/blog/side-effects/201911/the-truth-about-medical-pr

Posted Nov 14, 2019

Why the episode matters is best summed up by Amsterdam and McHenry: “The integrity of science depends on the trust placed in individual clinicians and researchers and in the peer-review system which is the foundation of a reliable body of knowledge…. Ghostwriting is a serious problem, because it… disguises marketing and public relations objectives of for-profit companies as science, conceals conflicts of interest of named ‘authors’ on manuscripts, misrepresents the results of scientific testing, and, most important, has contributed to fatal consequences in cases in which the safety of drugs are misreported.”

I remarked on another blog in a slightly different context the other week: “They have laws for us, we have no laws for them”.

What would Stephen have done if he had not been in a catch-22 where no other options were open to him but to carry on relying on this lot, with no second independent opinion from someone who wouldn’t be afraid to rock the boat? He had been in hospital seemingly voluntarily, but not taking doctors’ orders/drugs carries the threat (possibly bullying) of incarceration. He had had a flavor of what a mental hospital is like, and that may have contributed to his decision. What could most of us have done when encountering the medics for the first time without the knowledge gained by researching the net, or without realising that it is vital to protect ourselves from harm – from the very people who have the duty to do no harm? There is a limit to how much anybody can be expected to take responsibility when very unwell or still naively trusting, so access to advocates might help – even if unwelcome to some medics.

Stephen’s contacts behaved like a flock of sheep although Stephen was ultimately the lamb. They obeyed the first commandment by referring to NICE but disobeyed the final one – thou shalt not kill. NICE is their useful guardian but NICE did not tell them to pile on those drugs one on top of another. That they say ‘some of the drugs could have caused akathisia’ is outrageous, i.e. not just one but some. Even a non-medic can see what a vile concoction was delivered to Stephen. Wonder was it by injection, which is harder to resist and chuck in the bin.

What ‘they’ do is sacrifice innocent lives. The treatment they dished out would not be given to those dear to them. Was Buspirone used as some kind of experiment? Some lives are more precious than others. It was so obviously lethal it is impossible to pretend that it can be defended as ‘following guidelines’ even if that is how they are getting away with it. The errors and ignorance and collusion should lead to them being struck off. Sign on the dole where so many others end up after contact with maltreating medics. UK may be short of doctors but not so short we should allow avoidable deaths to be swept under a coroner’s carpet. So often the decent in society have had to stomach the corrupt self-serving way institutions run – the more they are let off the hook the more they will carry on saying how sorry they are, how they did everything possible and lessons will be learned. They rarely are.

If someone from the wrong side of the tracks commits an offence they are made to serve a sentence and are often used as a warning to others. That is unfair – but unless health workers see justice will be done they will continue to look away. A few sentences by the great and good in public will try to shut things down, just as they initially tried in the burning tower block disaster. Those who suffered didn’t allow the powerful to shut them up. The push against the abusive way people are treated as disposable has to be relentless in order to hope it will lead to justice for the victims of avoidable harm and suicide and a better informed health service and public.

On a practical note there should be the right to have an advocate (although they can be undermined too) present at any meeting, taking notes. No drug should be taken unless full information is given, with evidence of consent, and the person is told there are hazards to taking it. People should be told they have a right to a second opinion (although they can collude). Very few people are given any information about the medic they see and how much expertise they may have. That should be available – if someone goes private they have a choice of who they consult. There needs to be more inclusion of teaching by those who have first-hand knowledge of a condition or a treatment, and students need to be signposted to blogs and forums where people are documenting what is going on, as well as attending ‘user led’ events, as part of their course.

What would I personally do if I was the Coroner? Well, most followers of this blog won’t like this, but if it’s a genuine question David Healy is putting, I will give you my suggestion. I would do what bankers like J.P. Morgan, and others in the know in the business world often do. I’d go to a really good Medium and through them, have a chat with Stephen, and ask him to give the Coroner some proof. Something undeniable that would rock the Coroner back on his heels. Because there will be something.

This is a taboo subject never freely discussed. Yet, you would be amazed how many of us bereaved have done this, and what insights we have obtained. We keep this very very quiet, for fear of being judged as crackers, just like people keep quiet about their experiences on SSRIs for fear of their doctors branding them as mad and getting nasty. A few dare to mention it to each other behind closed doors. But for those in the know, it’s a fantastic resource. You have to be very discerning about the ability of the Medium though, as there are rogue ones, just like there are rogue doctors, but some are brilliantly talented and can indeed offer proofs. In my opinion. So Stephen will still have a voice.

Susanne, really brilliant insightful comment, thank you. Yes, Stephen (like our son), feeling unwell and shaken by his experience of incarceration and possibly frightened by unenlightened people treating him (not listening time and time again when he told them the effect the drugs were having on him), seeing all this, he could have decided death was the only way to peace. I know our son did. Unquestionably.

Interesting too, your words ‘if someone goes private they have a choice of who they consult’. The NHS introduced ‘Patient Choice’ when Tony Blair was PM. With great trumpeting of improving the NHS, everyone could ‘choose and book’ etc. But, guess what, as we ourselves found for Olly, IT DOESN’T APPLY TO MENTAL HEALTH.

I was at a Compassionate Mental Health event this week. (With Bob Whitaker, as it happened, he gave two riveting talks, what a fantastically inspiring guy). Afterwards, chatting with a lady ex-journalist, we discussed the difference between how you are treated when you have cancer and how you are treated when you are mentally ill. She had experienced both. She said they were streets apart. Told to shut up and do as she was told when in a mental hospital, but treated with great respect and kindness when she was in a general hospital for her cancer. Other delegates, hearing this, suggested she write an article detailing the differences. She said she just might.

The greatest tragedy of our age, this dealing out of poisons without a sense of responsibility by those who have this power invested in them, for the terrible, in some cases irrevocable results. Stephen was just one of so so many. Their own power is taken away, their feelings trampled underfoot, and after deaths like his, the family try to get justice but those who put the drugs into him stand together and block every pathway to get true appreciation of what really happened. ‘Lessons learned’….don’t make me laugh. If they are, then why does this happen again and again and again. Absolute power corrupts. But they won’t win for ever, and there will be a day of reckoning when Stephen and our son will face them with the power on THEIR side. I shall love to see it. I hope I’m there too.

Come the day I hope you’re there too Heather – there won’t be a hall big enough

Susanne, they have NO idea what awaits them. If they had, they’d be working their hardest to turn things around, blow the whistle on all they’ve done to obfuscate and ruin so many lives, and try in some small way to make amends whilst they still can. I almost feel sorry for them. Almost. They will suffer exactly as those they’ve caused suffering to, they will feel themselves exactly what they’ve unleashed on others, with the clear realisation at the same time, that this never needed to happen had they behaved with courage, honesty and human decency.

If I may, I’d like to make one further comment which I feel is still relevant here. My son’s situation was very similar to Stephen’s. He went into hospital for a few days in 2012 as a voluntary patient because he had repetitive thoughts telling him to kill himself (he was on escitalopram that year briefly, following isotretinoin initially for acne). He was puzzled by these terrifying suicidal thoughts which seemed to come out of nowhere. He had an incredibly successful creative web design business, a good relationship with a relaxing girlfriend and her family, and with us. There was no apparent reason for his anxiety and suicidal thoughts. Outwardly he appeared controlled, witty, utterly normal, but to us and hospital staff he confided the horrors he was battling in his mind. This, it turned out, was his undoing. A cocktail of drugs, lobbed in one after another, drove him into AKATHISIA. No one seemed bothered, except us and him.

The Home Treatment Psychiatrist he had the great misfortune to cross the path of, only met him twice, for two short interviews. This man, it appears, was well known by staff and patients alike as a self-opinionated bully who did not like to talk to Carers – Open Dialogue would have been anathema for him. He had no patience with ‘low level’ mental illness. By his brutish yet hypnotic behaviour, stopping the meds cold turkey, heaping shame on our vulnerable and trusting son, witnessed by us and The Team, he, in our opinion, drove our son to end his life, having lost all dignity and hope.

So WHY did those many staff in the Trust not mount a whistleblowing operation and thus save lives? We’ve met several since who knew exactly what was happening and say they were AFRAID of this man! Apparently one person tried. Why then did they turn their backs and maybe hide behind each other? I was a social worker in a busy London hospital in my 20s. Our head of department was a feisty woman of principle. She taught me to stand up for what was right, and not to fear the consequences. In Stephen’s case, and that of my son, people in the various professions who knew what happened to him did NOT have the courage to do that, although maybe we can see that some tried. Have ethics changed from the 1970s to now? Why did we not readily cover up then what we do now? Has the moral compass simply got swept away by greed and arrogance? Neither the NHS Trust nor the GMC gave us any indication that our son’s death would change things for the better; he was like a fly, swatted off their guideline sheets. They muttered and fluttered but took no responsibility for his horrid experience of treatment, and driving him to death. He was one of so so many. My fury persists. I feel impotent, just as no doubt so many, including Stephen’s family, do.

Why have so many become so spineless about standing up for what they know is the TRUTH? What would regenerate their backbones? What would they fear enough to make them behave with honesty at last? Any ideas?…

I’m very interested in reading more about the activation of memories referred to.

“Stephen had from bullying at school (which was minor and of no obvious consequence), through loss of a parent, onwards – the memories of which triggered by agitation linked to the drug caused him to commit suicide. Now we know why healthy volunteers commit suicide on these drugs. Even healthy volunteers who didn’t know they’d ever been bullied and both of whose parents are still alive. There’s always going to be something a drug can activate – which is the true cause of the suicide.”

Apologies for confusing you Barbara or anyone else who read this the same way. It was supposed to be ironical. In other words I think this idea about activation of memories is utter crap. These drugs can have a direct toxic effect – no memories needed. No-one talks about memories if they have too much too strong coffee – they talk about being poisoned

D

55 cups of black coffee …

The Hindmarch study was never published. I got to hear about it in 1998 from Ian Hindmarch himself when we were chatting about an article in the New Yorker by Andrew Solomon that compared the agitating effects of Zoloft to drinking 55 cups of black coffee. Hindmarch said he’d been involved in a study that mapped onto just what Solomon was describing.

https://davidhealy.org/zoloft-study-mystery-in-leeds/

How might the story of antidepressants and suicide have unfolded had this Zoloft study by Dr. Hindmarch been in the public domain?

WARNING: SUICIDAL THOUGHTS AND BEHAVIORS

https://www.pfizer.com/products/product-detail/zoloft

http://labeling.pfizer.com/ShowLabeling.aspx?id=517

Closely monitor for clinical worsening and emergence of suicidal thoughts and behaviors (5.1)

https://www.nhs.uk/medicines/sertraline/

Page last reviewed: 12 December 2018

Next review due: 12 December 2021

– see Volunteer.

The ABPI (Association of the British Pharmaceutical Industry) launches its 20/20 manifesto – in partnership with the British government and the NHS. You don’t even have to elect them since they have elected themselves anyway (and you thought you were worried about Donald Trump!). Government of the pharma by the pharma for the pharma in saecula saeculorum.

https://www.abpi.org.uk/media/7695/abpi-2020-manifesto-for-medicine.pdf

Thank you for this.

Rereading Whitaker, living with the devastation of what a drug has done to me. I have lost everything – and live with multiple forms of violence in my life related to coercion to consume the drug and the violence that the drug has wreaked on my body and mind – and facing reduced life expectancy and not living much longer. It has been a poverty pill all along for me and now I do not have enough for basics like food and am excluded from education and work – economic abuse, thus limiting my actions and the necessary resources to resist the abuse that comes with prescriber and pharmacological abuse, etc. I cannot bear to keep going and cannot bear the trauma of the medical industrial complex any longer. Thank you for being in the world. I really appreciate your words which give me space and dignity like Tina Minkowitz, Alphonso Lingis and Alan Clements – where others do not. Love your words so much like Tina, Alphonso and Alan.

In order to bring this issue to the fore, I think prescribers’ accountability would depend on the outcome of a postmortem, provided the combination of the following tests is implemented. Firstly, there needs to be a genotype test to ascertain whether the deceased metabolised the psych drugs effectively. Secondly, blood toxicology for all medications prescribed. The coroner may do toxicology testing thereby showing an overdose, but in the absence of the genetic profile, this outcome is meaningless for relatives.

The next of kin can ask the coroner/police for toxicology testing & can refuse for the body to be released to the undertaker until this testing is assured. At this point it is likely coroners will then use coercion, i.e. charge the next of kin for body storage in order to make you change your mind. There are independent labs (not NHS) for testing which police use. It may be necessary to engage a solicitor to instruct the police to provide confirmation re toxicology tests.

The outcome of inadvertent over-prescribing:

a) will come to the fore

b) get justice for relatives

c) ensure genetic testing is routinely carried out prior to prescribing for everyone – in general medicine as well as MH

And… we have another school shooting here in the US. Literally just thinking 2 days ago driving home from work how it’s been a few weeks since the last one and how it reliably would happen again. Knowing it’s a dream to think it won’t. Thoughts and Prayers. Enter the politicians.

More unspeakable crap….

Prescription AI

This series explores the promise of AI to personalize, democratize, and advance medicine—and the dangers of letting machines make decisions.

Illustration of how artificial intelligence could predict suicide.

ZACK ROSEBRUGH FOR QUARTZ

ART OF INTELLIGENCE

Machines know when someone’s about to attempt suicide. How should we use that information?

By Olivia Goldhill, September 5, 2018

A patient goes into the emergency room for a broken toe, is given a series of standardized tests, has their data fed into an algorithm, and—though they haven’t mentioned feeling depressed or having any suicidal thoughts—the machine identifies them as at high risk of suicide in the next week. Medical professionals must now broach the subject of suicide with someone who hasn’t yet asked for help, and find some way of intervening.

This scenario, where an actionable diagnosis comes not from a doctor’s evaluation or family member’s concern, but an algorithm, is an imminent reality. Last year, data scientists at Vanderbilt University Medical Center in Nashville, Tennessee, created a machine-learning algorithm (paywall) that uses hospital-admissions data, including age, gender, zip code, medication, and diagnostic history, to predict the likelihood of any given individual taking their own life. In trials using data gathered from more than 5,000 patients who had been admitted to the hospital for either self-harm or suicide attempts, the algorithm was 84% accurate at predicting whether someone would attempt suicide in the next week, and 80% accurate at predicting whether someone would attempt suicide within the next two years.

Colin Walsh, the Vanderbilt data scientist who led the project, wants to see this algorithm put to widespread use. Currently, most people in the US are only assessed for suicide risk when they actively seek psychiatric help, or exhibit clear-cut symptoms such as self-harm. Walsh hopes his algorithm will one day be applied in all medical settings, in order to catch depressive behavior early on, and give doctors the ability to proactively offer care to those who haven’t yet asked for it or shown obvious symptoms.

He’s not the only one interested in algorithms’ ability to predict suicide risk. Facebook has also developed pattern-recognition algorithms that will monitor users’ posts for risk of suicide or harm, and connect them with mental health services if necessary. The two projects rely on different data: Walsh has access to actual medical records, versus Facebook’s reliance on social media activity. Walsh’s project is also crucially different in that, by integrating with the US medical system, it opens up far more possibilities for how to respond to risk. At most, Facebook can put people in touch with mental health services, whereas doctors already have patients within their care and can offer an array of interventions and treatment programs.

Walsh is currently working with doctors to develop such an intervention program based on this algorithm. If the AI deems a patient to be at high risk of suicide, they might be asked to spend several days in a hospital under supervision. In certain cases, a doctor might deem hospitalization necessary regardless of whether or not the patient volunteers to be admitted. In lower-risk cases, the patient would be told of their analysis, and given information about available therapists and treatment plans. They would be offered an appointment with a psychiatrist and, a day or so after they’ve been discharged, a member of the medical facility would give them a call to check on their mental health.

This binary division of risk levels is imperfect, the Vanderbilt team acknowledges. For example, there are patients at intermediate risk, who are not clearly in immediate crisis, but are at considerable risk of suicide within a few weeks or months.

“We know we won’t get it right the first time,” says Walsh. “We’re trying to be transparent about that.” Nevertheless, he expects the program will be ready to roll out for clinical use in hospitals within the next two years.

Walsh consulted Warren Taylor, a psychiatrist and professor at Vanderbilt Psychiatric Hospital, on how the algorithm could work in practice, and he says the broken-toe scenario is conceivable. It’s fairly standard for someone to come in for a physical injury and leave with a diagnosis and prescription for extensive treatment for something else, Taylor says, often a previously undiagnosed addiction.

Most doctors are accustomed to keeping an eye out for the sorts of undiagnosed mental health conditions commonly seen in their fields, and psychiatrists are taught to assess suicide risk even among patients who don’t voluntarily mention suicidal thoughts. When they see patients at scheduled appointments, they consider factors such as demographics, medical history, and access to guns. Nevertheless, even psychiatrists are little better than a coin toss at predicting suicide attempts. And, Taylor notes, psychiatrists only have limited contact with many of the patients who are at risk of suicide; historically, he says, many of those who do attempt suicide often saw a doctor in the previous month, but comparatively few saw a psychiatrist.

“At the end of the day, we’re only human and our predictive powers are poor,” says Taylor. As such, the algorithm has the potential to make a drastic difference. The fact that so many suicidal patients see a doctor, if not a psychiatrist, during the critical period of their lives, means that the algorithm could be used among those many patients who come into contact with the medical system but currently aren’t assessed for risk of suicide. If an AI with an 80% prediction success rate is used to assess risk of suicide every time a patient comes into contact with medical care, then, in theory, we would be able to predict, treat, and hopefully prevent far more suicide attempts.

Doctors aren’t used to relying on machines to make such delicate decisions, but Taylor is open to trying them out. “Anything that could help give us more information is something we should include in evaluation,” he says.

Though useful, the algorithm will likely raise ethical issues that cannot be foreseen or resolved until they play out in practice. “It’s not really outlined in our ethical or practice guidelines how to use AI yet,” McKernan says. For example, if, as Walsh hopes, all emergency room visitors are automatically run through the suicide-prediction algorithm, should they be told this is happening? McKernan says yes: all patients must be told that hospitals are monitoring data and assessing suicide risk, she adds. If a computer algorithm deems them to be at risk, they should know to expect a call.

As with all medical data, the question of who has access to the information raises another ethical minefield. In the case of the algorithm, Walsh says only doctors and those hospital staff treating the patient would know about the algorithm’s assessment; insurance companies and government bodies would not be privy to such knowledge.

It’s not yet obvious exactly what steps health care providers should take when a machine decides a patient is suicidal. But it’s clear that recognizing risk, and creating further interactions between patients and doctors, could have a huge impact in preventing suicide. McKernan says research she conducted on patients with chronic pain, done over a 20-year period (1998 to 2017), found that those who have a history of self-harm and whom the algorithm found to be at higher risk of suicidal thoughts spent far less time with medical professionals over the decades than those at lower risk of suicide. The results, which are due to be published in Arthritis Care & Research, show correlation rather than causation—after all, it may be that people at lower risk of suicide were more willing to engage with doctors—but nevertheless highlights a factor for doctors to analyze and consider. “It highlighted the importance of being in contact with [health care] providers,” McKernan says, and can “help direct what interventions we want to design,” like the programs Walsh is currently refining.

Though algorithms will undoubtedly inform and benefit patient care, McKernan is adamant that humans must hold ultimate responsibility for the overall assessment and treatment of a patient. The decision to keep a person under forced health care, for their own protection but potentially against their will, is extremely ethically sensitive. “When you hospitalize someone under those circumstances, you remove personal autonomy,” she says. A decision to override a patient’s claim that they’re not at risk, whether made by doctor or machine, must only happen under special and rare circumstances. “I’m not comfortable with the idea that an algorithm could override clinical judgment,” McKernan says.

Although the data behind every individual decision is based on too complex an analysis to identify any single factor for suicide risk, the algorithm can point to broad overall trends. For example, Walsh noticed a correlation between risk of suicide and melatonin prescriptions. While it’s unlikely that melatonin causes suicidal thoughts, the prescription is likely an indication of trouble sleeping, which itself is a key factor in suicidal risk.

ZACK ROSEBRUGH FOR QUARTZ

Ultimately, use of algorithms in a medical setting should be focused on developing more informed, data-driven, health care practices, rather than instituting hierarchical decision-making. “All models have times when they’re wrong,” says Walsh. “We need to learn from those examples as well.” Any prediction model should improve with time. If a physician overrides an algorithm’s decision and decides a patient is not at high suicide risk, that information can be fed back into the program to make it more accurate in the future. If the patient does later die by suicide, that should also be relayed to both algorithm and physician, with the hope that both will continue to improve their prediction accuracy.

The point is to inform a physician’s judgment, and potentially help doctors reconsider how they evaluate risk. “As humans, we get blinded by our own assumptions,” says Taylor. “People who are very depressed can be very good actors.” Someone who’s at risk of suicide can be attractive, successful, and smiling. Machines, unlike humans, aren’t misled by these distracting details and, thanks to their unique ability to focus on data rather than human traits, have an uncanny ability to analyze and predict human behavior. Simply by virtue of thinking like a machine rather than a human, the algorithms can know us far better than we do ourselves.

Susanne’s discovery re ‘How artificial intelligence could predict suicide’.

I am confused. Why is it that we are constantly being implored, by those who we assume know what they are doing in areas of suicide prevention/mental health, to come out and confide how we are feeling, and now even more invasively, get artificially assessed by an algorithm whenever we go to A&E, BUT when people do, they are either disbelieved, called attention-seeking, or at the best, given a prescription and a talking to? There are two sides to this and they don’t seem to meet in the middle with common sense.

Point 1: Until understanding AKATHISIA gets enforced in training of ALL mental health professionals, we will get more and more suicides. We do not need algorithms to show us this, it’s obvious when someone has AKATHISIA, you just have to look at them, hardly too difficult surely, so long as you know what AKATHISIA looks like.

Point 2: one wonders how the algorithm will work on those taking RoAccutane isotretinoin. Many have sudden suicide attempts out of the blue. Very interesting to see how many predictions there’d be.

What causes me confusion, (because this happened before my son’s suicide):

if a relative goes to their own GP and confides that their loved one repeatedly tells them that they want to die, and the relative fears this may happen very soon, so asks for advice on how to save them, couldn’t the GP do more than say ‘tell them to go and talk to their own GP’ when they’ve already done this to no avail? The loved one’s GP has not understood how serious the situation is, they are not looking at the obvious AKATHISIA and getting the message before it’s too late. It is terrible for a parent to be well aware that a suicide is drawing near, but find no help anywhere to save the loved one except more medication which may make them feel worse and even less in control of their own judgement. There is now the excellent Gloucestershire independent service SUICIDE CRISIS, who are confidential, immediate, and relying on support through understanding what’s so intolerable in the life of the person that is driving them to want to end it. But SUICIDE CRISIS, a charity, exists only in that county. The Parliamentary Health Committee on prevention of suicide highly applaud their work, but we need that system of independent suicide prevention everywhere. Immediate help confidentially, not hamstrung by ‘guidelines’ but just driven by a desire to see things as they really are and do something common-sensibly about it. It took someone who’s suffered in the NHS system (whilst not unfairly castigating those in it who do their best) to point out what’s needed, get a team of like-minded professionals with her, and go out and save people who are lost in pain and frustration. In other words, she offered common sense and would not be deflected in her efforts, despite opposition from many in control of the NHS who found her success hard to stomach. No need for crappy algorithms, committees, buzzwords, ‘new initiatives’, just open eyes and a genuine desire to actually do something.

Would have been great for Stephen and my own son.

Agree wholeheartedly with everything you say here Heather. Just wondering whether the Gloucestershire Suicide Crisis group listen to those concerned FOR the person in crisis or just to the suicidal individual? Listening to those close to the suffering individual, who can often give vital information about the crisis, is vital, in my opinion, if we are ever going to improve matters in this respect. The individual is so intent on actually existing that s/he often lacks the vocabulary to adequately describe their feelings. Working together seems to be the obvious way forward but rarely seen when a person is in crisis – the individual becomes the centre of all attention and anyone else’s input is seen as irrelevant. Hardly the case when WE are the ones who see the extent of suffering that’s going on 24/7 – yes, I mean 24/7 as if you take yourself away from the situation for a few minutes, all could be lost. How easy it is for the ‘professionals’ to sit in for a snapshot of what’s going on – especially when the suffering individual will often manage to hold themselves under control whilst at such an appointment!

The work of this Suicide Crisis group should certainly be shared far and wide – but, actually, shouldn’t we be able to expect this type of treatment from our local mental health teams?

As for AI predicting the likelihood of depression or suicide – it sounds every bit as stupid an idea as the introduction of specific mental health teaching in our schools. Both will certainly end up with even more prescriptions for antidepressants and do nothing to improve the wellbeing of any of us. In schools, clinics, homes etc., we need time to share our feelings with one another without prejudice – to be accepted as we are and supported with empathy when that’s needed. That should not be confined to a ‘lesson’ in school nor a few minutes in clinic. If we, humans, fail to support one another then we leave the door wide open for the introduction of AI to do it for us. We humans are accustomed to ‘frail moments’ and, therefore, are capable of empathy. AI will certainly be found lacking in that respect.

When relying on AI in collaboration with health workers, what will happen is that we will lose control of our lives even more than now. Check out how many agencies are involved in gathering intimate personal information before coming to a decision to be handled by health workers, who would not have access to, or the knowledge to follow, the cascades involved in the algorithm used to determine the potential control over someone’s life. If they disagree with or resist the conclusion, medics are going to be just as likely to lose a job as if they breach NICE guidelines, especially as guidelines are described as ‘advice’ and have some leeway, whereas the AI decision would have much more clout by then. If someone they perceive as becoming suicidal (that has gone wrong already) and on the receiving end of this kind of scrutiny resists – we can imagine the consequences – it won’t be loving kindness with an empathetic person of their choice, someone they can trust and relate to – it will be more drugs and coercion. There is a good reason why individuals wisely do hold themselves in control when they are subjected to the sort of appointments common in mental health settings, as you describe, Mary. Given the godlike power they have over our lives (compulsory detention, enforced drugging), ‘I did it because AI says so’ would give them even more protection to abuse that power – and keep a job. I would not support someone who puts their job before resisting an unreliable NICE or AI judgement. Empathy goes out the window too often when things go wrong and families are left relying on courts.

S

I think you’re missing my point. Yes, AI in the form of the kind of apps we have now would be a disaster. But robots like Google cars are now learning. Get a Google doc soon, and give it some leeway to kill some people early on, and it will soon keep more people alive – and it will not be by more prescribing. It will not put you on drugs you can’t get off, in part because it will want to ensure you are never on as many as 5 drugs, and it will not keep to guidelines based on fake/junk articles – it will learn that it makes sense to listen to the patient.

The insurance industry will then decide who sees you next – Google or the doctor – and doctors will be out of business.

D

Mary,

SUICIDE CRISIS’s founder Joy Hibbins spoke a while ago on Woman’s Hour, BBC Radio 4. She totally accepts that working with the family, where the patient is not actively asking her not to, is vital. Her wonderful book, SUICIDE CRISIS TECHNIQUES came out early this year, you can get it on Amazon and all monies from sales go into her charity. I took a copy of that book to the recent conference on Compassionate Mental Health in Hereford where Robert Whitaker was the main speaker, and gave it to him and he says he may use it in a symposium on Suicide Prevention soon to take place in the USA.

Joy uses common sense. She takes NO funding from Government or NHS so she is not hampered by NICE guidelines. She offers immediate help when a suicidal person contacts her organisation via their website, where the number to call is clear. She or her well trained team either meet the person in her discreetly sited rooms, easily accessible in town, or she and her team will go out to their home. She listens, and she tries to keep the same two people working with the individual so they don’t have to keep regurgitating their traumatic story over and over to different people. (Olly had 26 different ‘care’ workers he had to tell his story to, reliving the PTSD of his horrendous school experiences at the hands of mental and physical bullies. He had earlier found EMDR no good as a therapy because he had to re-live those experiences in his head.) So for many people, repeating them over and over makes them worse.

That often applies by the way, to parents who’ve lost an offspring to Suicide. In my case, reliving the awful events, (bullying by ignorant psychiatrist to us and to Olly, witnessed by The Team, the effects of Sertraline on ruining Olly’s capacity for engagement and rational thought, leading to death), makes me feel desperately low. It does NOT help.

I can’t get justice on those issues, having invested endless time and money on them, so I need to shut them down in my head whenever it’s not necessary to bring them forward. So does the suicidal person, once they’ve explained their problems to the SUICIDE CRISIS member of the team. They focus on the future, a plan for survival, a way to find hope for a life worth living. And they are there for the long haul. Not with as much intensity as at the start, but if the person has a blip during recovery, they know they can ring up and go back for a chat. Often that’s all they need, to set them on their constructive ‘upward and onward’ way again.

Olly’s Charity puts all they can into this wonderful charity. I think it is the way through, it’s common sense and it is really making a difference, against sadly, an amount of negative sniping by those in officialdom who can’t match their success in figures of saving lives since 2012. They negate Joy because she has ‘lived’ experience. How utterly stupid is that! But in the end, look at the data, look at the numbers of lives saved. I think it stands at none lost of those who’ve approached them since 2012 when their service began. I could be wrong, but till lately that was definitely the case. We are actively trying to get this service into Worcester and other towns in UK.

At present what doctors learn from a case like Stephen’s is that not keeping to the Guidelines means they lose their job – or that, no matter what happens to the patient, if they keep to the Guidelines nothing will happen to them.

Very soon when we have robots who can learn, if they are programmed to save lives rather than keep to Guidelines, they will likely do better than doctors – precisely because they will listen.

If there was any evidence of doctors exercising any empathy, perhaps things would be different.

D

We are a very defective species – I recall a good many years ago the editor of the Journal of Medical Ethics arguing in BMJ that doctors should not be allowed to exercise their consciences.

https://www.bmj.com/content/332/7536/294

Savulescu cited a speech of Shakespeare’s Richard III, not realising that he was supposed to be a villain (maybe a case of an overspecialised technocrat). Many professional readers were appalled and probably felt insulted, and yet you felt that most of the time there was already far too much regimentation – perhaps part of the problem is that medical students really have to do as they are told and they get used to it. That said, I have met a lot of kind doctors in the last three years and they have kept me alive.

But people like Savulescu seem to be there to turn decent people into barbarians:

https://blogs.bmj.com/medical-ethics/2012/03/02/an-open-letter-from-giubilini-and-minerva/

Jonathan Swift at least was being ironic when he wrote his Modest Proposal.

Let’s not forget it’s a rotten barrel, not a rotten apple, issue. Listening should always happen and is a good place to start. Finding a way to take back the power from drug companies is essential to solving the issue. I like to think the opioid crisis, like the exposure of big tobacco and the harms of cigarettes, is the start of a pie crumbling. Big giants are hard to tumble but when they tumble they seem to come down in a heap. They’ll reassemble and find a different target but they’re exposed. We aren’t making people better with ten drugs. This is obvious to many but change takes a long time to happen and filter down. Most doctors and practitioners want to do what will help their patient. The message needs to change. Less is more. Dr. Healy should be in the curriculum of a core course on deprescribing for all med students.